You might have recently heard people talking about “memory-safe” languages and how we all need to start using them. There is a loud contingent of people on LinkedIn and Twitter advocating that we move away from languages like C and C++ because they aren’t “memory-safe”. The reasoning is that memory-related vulnerabilities like buffer overflows, out-of-bounds reads and writes, and “use after free” bugs have historically accounted for most vulnerabilities.

Many of the loudest voices calling for the use of memory-safe languages are coming from government sources. There has been an unprecedented amount of focus on this specific problem from within the American government and its allies. In December 2023, the Cybersecurity and Infrastructure Security Agency (CISA), in collaboration with the FBI, the Australian Signals Directorate’s Australian Cyber Security Centre, and the Canadian Centre for Cyber Security, issued a report supporting the drive by Five Eyes nations to encourage public and private sector organizations to reduce the number of software vulnerabilities that arise from memory safety bugs.

The government points at data from tech giants to prove this: Microsoft says that 70% of the CVEs they publish each year are due to memory-related vulnerabilities. Similarly, Google says that 90% of Android bugs are caused by out-of-bounds read and write bugs alone. So clearly, we still have a problem with older apps and their memory management issues. But is the Google and Microsoft data mentioned above really representative of the bug classes that most companies are seeing?

The December report was followed quickly in February 2024 by the Office of the National Cyber Director (ONCD) publishing “Back to the Building Blocks: A Path Towards Secure and Measurable Software”. Not to be outdone, the White House published a “statement of support” for the ONCD report.

Clearly, the US government was determined to let us know that using C or C++ is tantamount to being a traitor.

It does make me wonder, though: Which vendor might be in the US government’s ear saying their language is more secure?

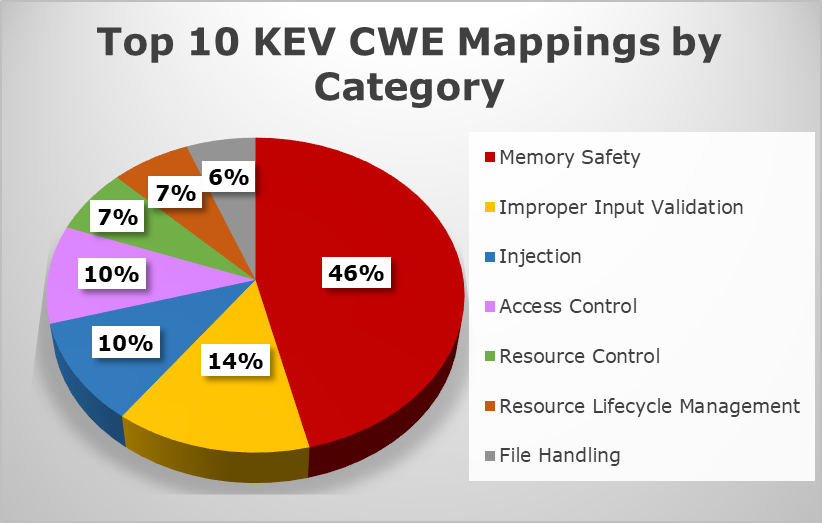

If you look at the MITRE Known Exploited Vulnerabilities data, you would think there are no bigger issues to solve than memory management ones!

The percentage of memory safety-related CVEs added to the Known Exploited Vulnerabilities (KEV) catalogue in 2023 is huge! 46% of all CVEs on the KEV map to memory safety-related CWEs.

Continuing the trend of government taking a stand on the subject, the Australian government recently published their own report advocating for the use of memory-safe languages in open-source projects.

Real Talk

While I’m happy that the government cares about open-source application security, this report combines two false narratives into one big mistruth:

First, that open-source is the bad guy, and second, that you are part of the problem if you’ve ever written any C or C++ code.

While I can appreciate advocating for a long-term strategy to use memory-safe languages, I am surprised and disappointed by the hype around the subject by people who frankly don’t know what the f**k they’re talking about. Like seriously, most of the voices I’ve seen pushing this theme on LinkedIn are not software engineers. They are influencers looking for a theme that non-technical people can get behind.

This blog post is meant to call bullshit on these shenanigans and expose them for what they are: Hyperbole, posturing, and bad policy.

While “memory-safe” languages can help mitigate certain bug classes, they are far from a panacea. There are so many things you need to look at when deciding which risks to tackle first: Are your applications internet-facing? Do your apps take input from an untrusted source? Has someone been updating your C and C++ code? Are your applications running on an unpatched Windows box, or in a transient container? Where is your super-sensitive data stored? Are you using a WAF that can filter out some of the vulnerability-scanning noise and injection attempts? Are your apps writing, reading, or uploading files you give them? Is your application talking directly to a database, going through an ORM, or not touching a database at all? There is a lot to take into account, and the language an application is written in is just one factor.

This constant refrain that C and C++ are bad is encouraging organizations to waste time on fixing problems they don’t have, while ignoring problems they most certainly *do* have.

And what’s up with the media’s singular obsession with Rust as (evidently) the only memory-safe language?! Who told them that?!? Many of the articles I’ve read recently strongly imply that writing memory-safe applications in languages like C/C++ is a herculean task, if not outright impossible. This is bullshit. You can write C code that is memory-safe! It just takes more work. Kinda like mitigating XSS with CSP takes more work, or sanitizing your input takes more work. Oh wait, I’m getting ahead of myself…

Are “memory-safe” languages the AppSec hill we want to die on?

Let me say again for the record: I think moving towards memory-safe languages is a great strategy for mitigating memory-related issues. So I am no Luddite insisting that we all write C code for everything and advocating for writing APIs in assembly, or webservers in Bash. Wait… I did do that last one… oops!

The OpenSSF is one of the loudest proponents of Rust, which makes sense, as they sponsor the Rust Foundation via the Alpha-Omega project. They target Rust adoption strategically, and I think much of their narrative around memory safety and Rust has permeated the broader conversation about modern application security and shaped the groupthink.

The Linux kernel development team is indeed now using Rust as well as C. Rust is only the second language the core Linux team has allowed, and that decision makes a lot of sense. The Linux kernel is an enormously important asset to the world. The internet runs on Linux, and it makes sense that, as the kernel evolves, the team would start to migrate towards a memory-safe language like Rust.

But this is a special circumstance, as the Linux kernel is one of the very few truly ubiquitous projects that continues to be written in C. Much of the rest of the world now writes software in other languages, the majority of which are memory-safe.

When the government is advocating for an application security strategy, it needs to take into account all factors, not just historical data.

For example:

- What languages are we developing new applications in?

- What are the vulnerability classes affecting those applications?

- What context are our applications running in? Are they internet-facing?

- What data do these applications process? Is there personally identifiable information or health information involved?

Maybe let’s use resources we already have, like the OWASP Top Ten, to determine which vulnerability classes are really causing us the most harm today, right now, in 2024, not 2019.

To drive this point home, memory management vulnerabilities like buffer overflows have fallen off the OWASP Top Ten and now sit at number 11.

We have other, better hills to die on

My issue is that this focus on memory-safe languages as a cure-all for application security is just a distraction. Memory-related issues like buffer overflows and use-after-free are not the bugs my team is dealing with in our core intellectual property. Instead, we are dealing with totally different classes of problems. We are trying to manage client-side and visibility problems brought on by the global migration to JavaScript. We are dealing with cross-site scripting (XSS), insecure content management systems, malicious packages and CDNs, embedded credentials in customers’ code, constantly changing third-party dependencies and APIs, and several other issues that the government and LinkedIn influencers aren’t talking about.

The attack surface has moved from the datacenter to our customers’ browsers, and I’m not seeing anyone write executive orders about that.

Refactoring C/C++ apps into memory-safe languages introduces its own challenges

I recently heard a CISO say, “We are going to rewrite our C, C++ and Assembly apps in Rust”. I was dumbfounded. How did this person get their job if they are accepting LinkedIn hot takes as their application security strategy?

I was going to write a whole paragraph about how refactoring apps comes with its own set of security problems, like introducing new bugs, especially for developers who have had to quickly learn a new language. But then Omkhar did a better job of detailing the issue in this interview.

The reality is that we are making progress

So let’s go back to those statistics from Microsoft and Google that declare that most vulnerabilities are memory safety related, and dig in a bit more. When Microsoft says that 70% of their vulnerabilities are memory safety bugs, what do they mean? What percentage of that majority are net new bugs? Are people writing so much C and C++ that the numbers are going up? It turns out that most of those bugs are historical. When CISA released its article “The Urgent Need for Memory Safety in Software Products” in December 2023, it was referencing articles by Microsoft and Google from 2019 and 2020 respectively.

So, it appears we are defining the current application security strategy based on historical vulnerability data. Google itself says that the number of memory safety bugs in Android has fallen dramatically in recent years.

In a web world, most of our core intellectual property is delivered via SaaS and cloud-native solutions. It’s here, inside the complex world of modern web applications, that much of that core IP is exposed to attackers. I have to ask myself: why are we spending so much energy talking about a class of bugs that has been consistently declining for years? AppSec teams and bug bounty researchers know from experience that these bug classes are going the way of the dodo.

Similarly, I think most teams understand that if they are going to write a new compiled tool, they aren’t going to use C or C++. Most CLI tools I use now are written in Go, which, by the way, is memory-safe too, regardless of what the Rust fanatics tell you. Nuclei, Gitleaks, Dorky, ffuf, gau, github-subdomains, Naabu, Subfinder, Amass, Trivy, Bearer, Trufflehog and every Hakluke tool ever written are all written in Go.

Because non-technical people have largely delivered the messaging on memory-safe languages, the messaging has been over-simplified.

We know that Rust is memory-safe because every media outlet in the world tells us it is, but what about other languages? For example, is Java memory-safe? How about JavaScript? (Both are.)

PHP can’t possibly be memory-safe, right? Well, you don’t manage memory in PHP, so it kinda is. The point here is that the messaging has been poorly executed, and we’ve spent too much time nailing C developers to the proverbial cross.

Let me say this clearly and explicitly: The use of C/C++, or any other language for that matter, does not equate to an inevitable memory catastrophe. In fact, with proper knowledge, discipline, and use of tools, one can write secure, memory-safe applications in these languages.
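To make “more work” a little more concrete, here is a minimal C sketch of two everyday habits that shut down common memory bug classes: checking the destination size before every copy, and nulling a pointer the moment it is freed. It is an illustration under simple assumptions (NUL-terminated input, one owning variable per allocation), not a complete hardening guide, and `safe_copy` and `FREE_AND_NULL` are hypothetical names invented for this example.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: free a heap pointer and immediately null it, so a
 * second FREE_AND_NULL on the same variable is a harmless free(NULL).
 * Note: only this variable is nulled; other copies of the pointer are not. */
#define FREE_AND_NULL(p) \
    do {                 \
        free(p);         \
        (p) = NULL;      \
    } while (0)

/* Hypothetical helper: copy a NUL-terminated string into dst without ever
 * writing more than dst_size bytes. Returns 0 on success, or -1 if the
 * arguments are invalid or src does not fit (in the too-long case dst is
 * set to an empty string). */
int safe_copy(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size == 0) {
        return -1; /* no buffer we can safely write into */
    }

    size_t len = strlen(src); /* assumes src is NUL-terminated */
    if (len >= dst_size) {    /* len + 1 bytes are needed for the '\0' */
        dst[0] = '\0';
        return -1;
    }

    memcpy(dst, src, len + 1); /* copies the terminator as well */
    return 0;
}
```

Unlike a bare `strcpy`, `safe_copy` refuses to write past the end of `dst`, and calling `FREE_AND_NULL` twice on the same variable turns a would-be double free into a harmless `free(NULL)`. For the “use of tools” part, building your tests with `-fsanitize=address` (supported by both GCC and Clang) enables AddressSanitizer, which reports out-of-bounds and use-after-free accesses at runtime.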

As I said above, the laser focus on memory-safe languages also distracts us from addressing a multitude of more pressing security issues. These issues can be resolved without having to rewrite a bunch of apps that aren’t even public-facing. Here are some examples of what we should be focusing on instead:

- Cross-Site Scripting (XSS): We should prioritize deploying viable Content Security Policies (CSP) to address XSS vulnerabilities in older applications, and build new ones with modern web frameworks like React and Ember. A well-crafted CSP alone can eliminate 90% of JavaScript client-side issues with one control.

- Unpatched CMS: For those using content management systems like WordPress, Drupal, or Magento, regular patching is critical. This helps to secure these platforms against known vulnerabilities.

- Insecure credentials: Hard-coding credentials in your app is an open invitation for hackers. If I can find them in your web root, so can malicious actors. Start using credential stores and the variable vaults your SCM provides.

- Out-of-Date Scaffolding: Stop using out-of-date scaffolding like “react-build-scripts” that introduce a staggering number of transitive vulnerabilities into your front end.

- Insecure cloud-native deployments: Many teams have written, built, and deployed cloud-native apps without taking the time to understand the new security challenges that come with this model.

- Insecure functions: The number of teams that still don’t add Semgrep to their pre-commit git hooks or a linter to their IDE is higher than you think. Adding this one step will flag insecure functions like eval, innerHTML, exec, “spawn with shell”, etc. before you push them to master, where they become everybody’s problem.
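For the insecure credentials item, here is a minimal C sketch of the alternative to hard-coding: read the secret at startup from an environment variable that your credential store or your SCM’s pipeline variables inject at deploy time, and refuse to continue if it is missing. `require_secret` and the `APP_DB_PASSWORD` name used below are hypothetical, and how secrets actually reach the process depends on your platform.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: fetch a secret that was injected into the process
 * environment (e.g. by a credential store or CI/CD variables) instead of
 * being hard-coded in the source tree or web root. Returns NULL, and logs
 * only the variable *name* (never a value), if the secret is missing or
 * empty. */
const char *require_secret(const char *name)
{
    const char *value = getenv(name);
    if (value == NULL || value[0] == '\0') {
        fprintf(stderr, "required secret %s is not set\n", name);
        return NULL;
    }
    return value;
}
```

A caller would do something like `const char *db_password = require_secret("APP_DB_PASSWORD");` and exit if it gets back NULL. Environment variables aren’t a perfect hiding place (child processes inherit them, for one), but unlike a string literal they never land in your repository or your web root.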

In closing…

Encouraging organizations to use memory-safe languages is a great idea for net new applications. However, if these same orgs are telling their over-taxed developers to refactor C and C++ apps to “make them memory safe”, they are wasting their time.

Let’s make sure that the real application security threats we all face are getting the right amount of airtime, and that we aren’t letting non-technical bureaucrats decide our global appsec strategies, okay?