What is offensive security?

In today’s complex cybersecurity landscape, organizations use a variety of techniques to fortify their defenses and take proactive measures to ensure the integrity of their systems. One way organizations can do this is by deploying “offensive security” functions. Offensive security operations are often carried out by ethical hackers: cybersecurity professionals who use their hacking skills to emulate attacker behavior. The idea is to identify issues by emulating this behavior so the organization can proactively fix what is found.

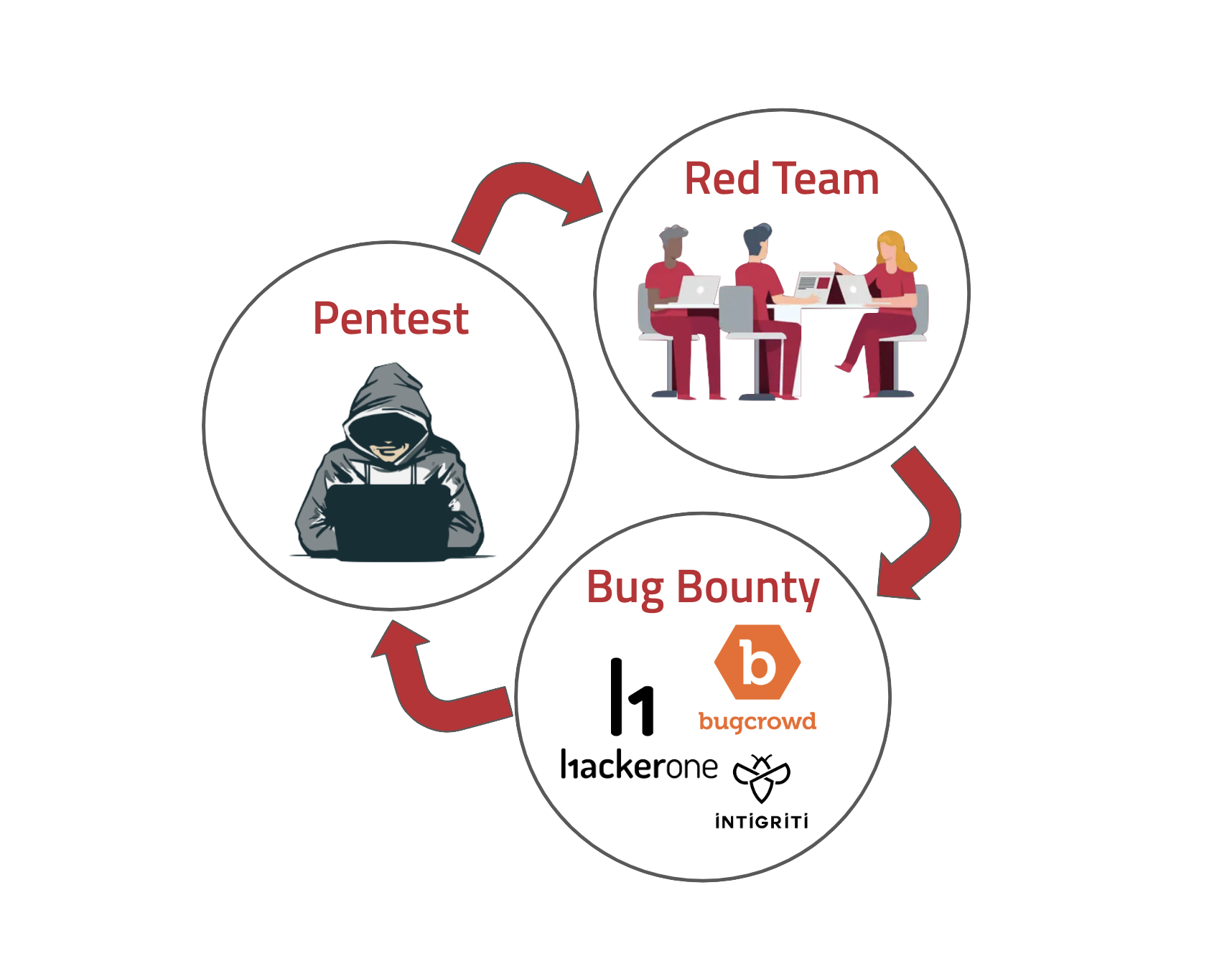

Among these techniques, penetration testing, bug bounty research, and red teaming are the three main offensive security practices. Each offers unique advantages and, when used in concert, they can significantly enhance an organization’s security posture and create an amazing feedback loop.

Let’s be honest: not all orgs are mature enough to do all three, but for those that can, I highly recommend it. Let’s see why…

How to optimize your offensive security strategy

In my experience, bug bounty programs perform best within a triumvirate of offensive security practices that also includes red teaming and penetration testing. If you over-index on any one of the three, you miss the benefits of the others.

Let’s talk about each of these three offensive security practices and find out what they’re good for, and what they aren’t.

Penetration Testing: The Foundation

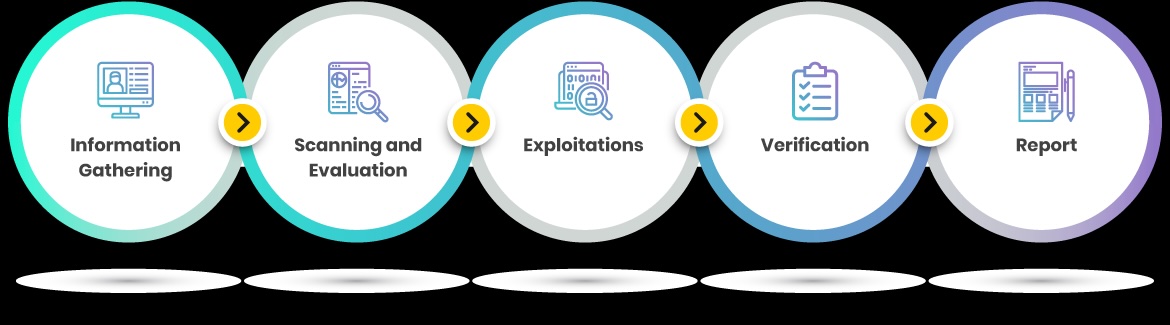

Penetration testing is often the first line of defense for many organizations, and it’s certainly the most recognized offensive security practice. This type of testing involves a simulated attack on a system to uncover vulnerabilities that an actual attacker might exploit. Penetration testing is broad in scope and doesn’t rely on stealth.

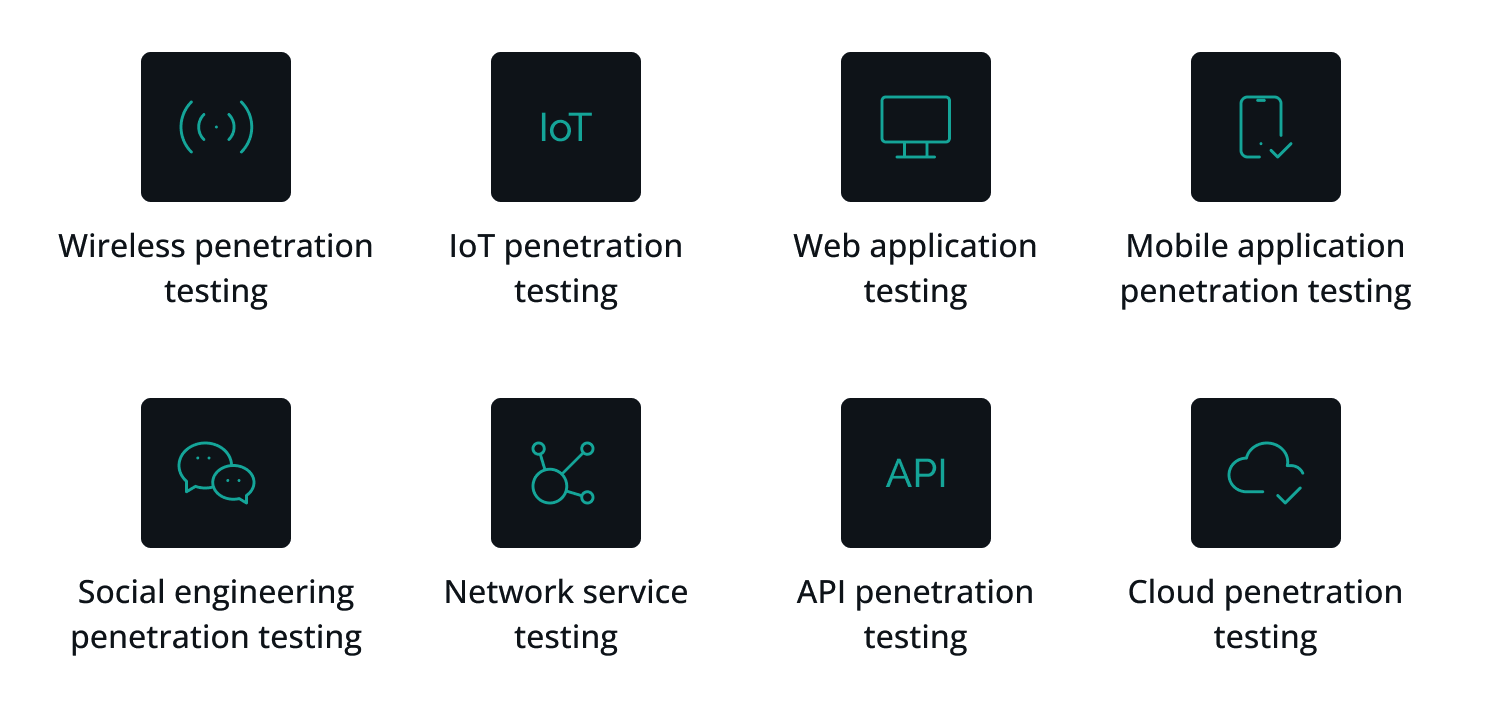

There are different types of pentests: physical tests, web application tests, API tests, network tests, cloud tests, etc. Based on an informal survey of pentesters we know (very scientific), web application or API testing accounts for more than 75% of all modern penetration tests.

Web application pentest engagements typically last 2 to 4 weeks but can run longer for larger targets or environments. Pentesters are given a target, or a list of targets, and have the creative license to go and explore those assets. Pentesting usually doesn’t involve much upfront planning; once the engagement kicks off, the researchers start active testing.

As part of their testing, pentesters perform reconnaissance on the asset(s), and if the target is a web application they will typically explore all of its functions. If the application has an authenticated area, the pentester will create an account and use a proxy to observe how the account creation flow works. Testers use the application to understand how a normal user interacts with it. They take notes on what types of data are used, how users access their own data, and whether a user can interact with another user’s data. If the application allows users to upload files or documents, the tester will drill into those functions. If the app generates PDFs or other exported data types, the tester will probe those too.
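To make the “can one user reach another user’s data?” question concrete, here is a minimal sketch of a cross-account access check. Everything in it is hypothetical: a toy in-memory invoice store stands in for the application, and `vulnerable_get_invoice`, `fixed_get_invoice`, and `cross_account_findings` are illustrative names, not a real tool or API. In an actual engagement, each handler call would be an HTTP request replayed through the proxy with the second test account’s session.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Response:
    status: int
    body: Optional[dict] = None


# Hypothetical application data: invoices keyed by ID, each owned by one user.
INVOICES: Dict[int, dict] = {
    101: {"owner": "alice", "amount": 250},
    202: {"owner": "bob", "amount": 990},
}


def vulnerable_get_invoice(session_user: str, invoice_id: int) -> Response:
    """Looks up the invoice but never checks who owns it (the bug under test)."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None:
        return Response(404)
    return Response(200, invoice)


def fixed_get_invoice(session_user: str, invoice_id: int) -> Response:
    """Same lookup, but only returns the record to its owner."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice["owner"] != session_user:
        return Response(404)
    return Response(200, invoice)


Handler = Callable[[str, int], Response]


def cross_account_findings(
    handler: Handler, users_to_ids: Dict[str, List[int]]
) -> List[Tuple[str, str, int]]:
    """Replay each user's object IDs with every other test account's session.

    Returns (attacker, owner, object_id) for each request that succeeded.
    """
    findings = []
    for owner, ids in users_to_ids.items():
        for attacker in users_to_ids:
            if attacker == owner:
                continue
            for obj_id in ids:
                if handler(attacker, obj_id).status == 200:
                    findings.append((attacker, owner, obj_id))
    return findings


accounts = {"alice": [101], "bob": [202]}
print(cross_account_findings(vulnerable_get_invoice, accounts))  # [('bob', 'alice', 101), ('alice', 'bob', 202)]
print(cross_account_findings(fixed_get_invoice, accounts))  # []
```

Using two accounts the tester controls keeps the check safe: every “foreign” object being requested belongs to another test account, not a real customer.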

OWASP has a great document explaining the pentest methodology which I’ve used for years.

At the end of every pentest engagement, the customer receives a report that details all the findings. Sometimes those reports include remediation suggestions as well.

Two Types of Penetration Tests

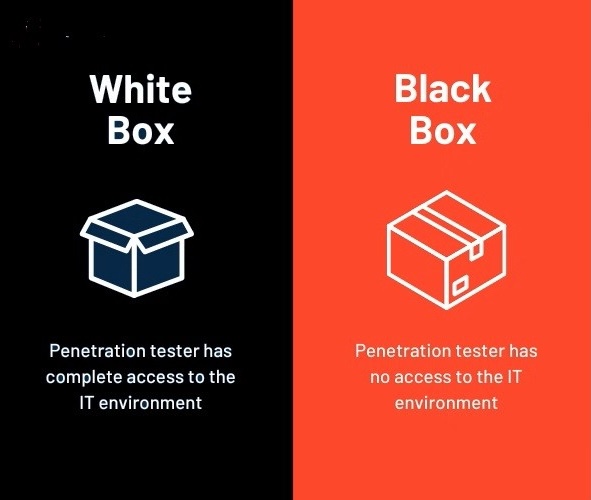

Blackbox testing

The first type starts with only the target name and nothing else. This technique best emulates most external attackers, who don’t have the luxury of an authenticated user, documentation, source code, etc. This type of testing is called “black-box” testing.

Whitebox testing

The second type of pentest is a “white-box” test, which gives the tester access to most or all of the internal resources the company has for the target. For example, in a white-box test it is common for the tester to have access to the application source code, an authenticated admin account for the application, and architectural documents and other internal documentation.

The Value of Penetration Testing

When penetration testing is driven by a genuine interest in security uplift, it can be the single best arrow in your offensive security quiver. Penetration testing is probably the best way to identify security issues in a single resource like a web app or API. For example, if you are building a SaaS platform, your most critical asset is the web portal your customers use and store their data in. Pentesting allows the tester to drill into every part of that application in a way that other offensive functions can’t.

Unlike other offensive security practices, penetration tests can provide broad insights in a relatively short period: maybe as little as two weeks. Pentests can also deliver deeper, focused insights into core functions of an application like authentication, multi-tenancy isolation, data protection, database security, etc.

Finally, penetration testing is probably the easiest offensive security practice to approach if your company is new to the concept. It’s fairly straightforward to find and contract with an external pentesting company. Pentesting companies have an established format and structure for their tests and methodology, which makes it easy for a startup to follow along with the process.

However, penetration tests can be expensive, typically costing roughly $20k to $35k per engagement. If you are sourcing a pentest and it costs less than $15k, you might want to look around a bit more. Many companies offer cut-rate penetration testing, but you get what you pay for: most cheap pentests lean heavily on automated scanners, which don’t provide the same benefit that experienced human testers do. Unfortunately, many companies get pentests only to meet compliance requirements, so they shop for the cheapest price.

Pentesting for Compliance is just box ticking

Pentesting is also commonly the first offensive security practice an organization encounters, since yearly pentests are a common compliance requirement.

Unfortunately, when pentesting is driven primarily (or entirely) by compliance requirements, it loses much of its value. If an organization is only doing the pentest to check a tickbox, then they probably aren’t interested in security uplift, right?

This is particularly obvious with startups that are trying to acquire a new customer. That customer will require its vendors to meet compliance specifications, such as obtaining a SOC 2 report, ISO 27001 certification, or FedRAMP authorization. There are also new state-level frameworks like TX-RAMP in the US.

In my experience, the startup’s CTO, or another engineering leader, often doesn’t see the value in security and pursues a pentest engagement just to satisfy compliance requirements. This “CTO phenomenon” is easy to spot: the startup usually has no other security functions in place when it sources the pentest. They will say things like “we just need to do a quick web app pentest,” which betrays their ignorance. These companies aren’t interested in real security outcomes.

Bug Bounty Research: The Crowd-Sourced Approach

While penetration testing provides a fairly comprehensive high-level overview of potential vulnerabilities in a specific target, bug bounty research can provide deeper, more granular and specific insights across functions or workflows in an application.

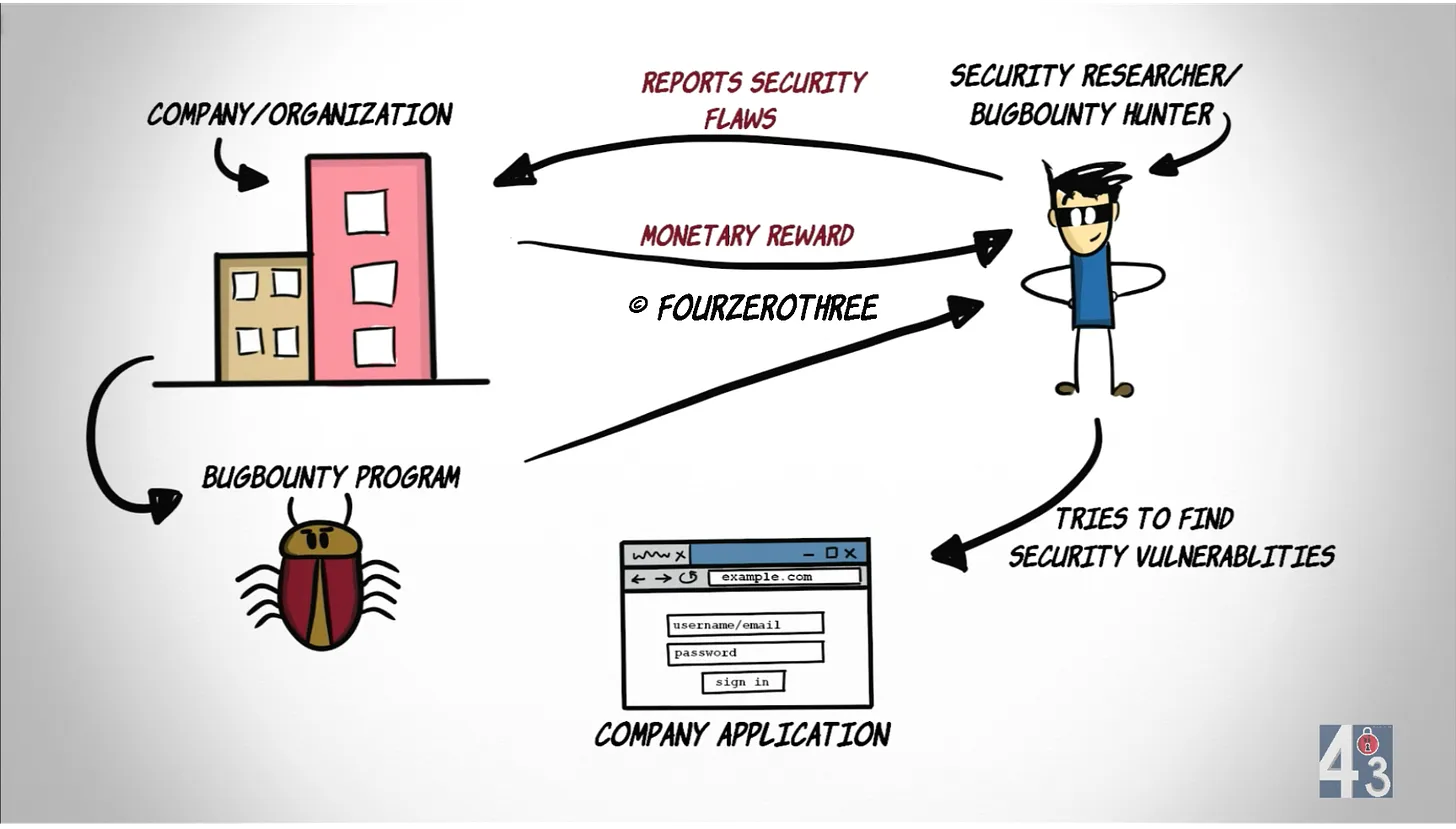

Companies will typically partner with a bug bounty platform like Bugcrowd, HackerOne, or YesWeHack that manages the program for them. The program then invites external security researchers on the platform to find and report vulnerabilities in the organization’s systems or applications. Unlike pentesting or red teaming, bug bounty is almost always performed by external researchers, and a company’s own employees are often barred from participating in the program. However, while Bugcrowd or HackerOne might triage submissions, the company itself needs to dedicate internal resources to handle the submissions once they are triaged.

Image courtesy of @fourzerothree

Not all bug bounty programs are run through a platform like Bugcrowd. Some well-funded organizations will create and host their own self-managed bug bounty programs. Meta, Google and Microsoft are examples of self-managed programs.

Regardless of whether the bug bounty program is hosted on a platform or self-managed, each program has a scope, which describes in detail what researchers are allowed to hack on. Researcher submissions can range from simple bugs to complex vulnerabilities. These programs usually reward researchers based on the severity of the vulnerabilities they discover. Critical-priority findings, or P1s, will often pay $5k to $20k or more, and some independent researchers make a living doing bug bounty exclusively.

Vulnerability Disclosure Programs (VDP)

Vulnerability disclosure programs are similar to bug bounty programs, and the terms are sometimes used interchangeably. A VDP is a public way for a company to let security researchers know how to contact it. The primary function of a VDP is to give researchers a way to report a bug to the company.

VDPs sometimes list which resources are in scope, similar to how a bug bounty scope would read. However, unlike a bug bounty, a VDP is not an invitation to hack on company resources, and VDPs do not pay researchers for their findings.

Public vs Private Programs

There are two types of paid bug bounty programs: public and private. A public program allows any researcher to join, but more importantly, if you search for a public program online you’ll find it. The Uber and Atlassian programs are both public, and you can easily find them with a Google search.

Because the Uber bug bounty program is public, you can easily look up details about it. For example, you can see the payouts the Uber program has made recently.

Private bug bounty programs are different in that you typically can’t find them by googling. The company wants to keep its program quiet, and the only way you learn about it is through an invite from Bugcrowd, HackerOne, etc.

Typically, you will get invited to a private program after working on public programs and building up feedback and a reputation on that platform.

Bug bounty leverages diverse skill sets and thinking

One of the best things about bug bounty’s crowd-sourced approach is that it brings in a wide range of perspectives and expertise. The diversity of skills, techniques and workflows across many researchers often unearths issues that internal teams might overlook.

I’ve been lucky enough to be on the program side a few times, and it’s amazing to see the kinds of submissions you get. These submissions tend to be very focused on a particular function or workflow, so the findings are structured differently from what you’ll see in penetration test reports, and the value they bring is different too. Because many researchers typically work on a program at the same time, the volume of findings brings its own intrinsic value. For example, you might see one submission about a publicly exposed credential and another about a function that allows insecure uploads. Those two findings might actually be connected. If the security team managing the submissions is paying attention, it can begin to spot groups of related vulnerabilities, then look for patterns that point to more fundamental flaws than any single submission suggests.
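As a rough illustration of spotting related findings, here is a minimal sketch that clusters submissions by the component a triager tagged them with. The `Submission` fields, the component tags, the report IDs, and the `min_size` threshold are all assumptions for illustration; real platforms expose their own report formats, and grouping by a single tag is a deliberately simple stand-in for the judgment a security team applies. The sample data mirrors the exposed-credential and insecure-upload pairing described above.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Submission:
    report_id: str
    title: str
    component: str  # hypothetical triage tag: the feature or service the report touches


def related_clusters(subs: List[Submission], min_size: int = 2) -> Dict[str, List[str]]:
    """Group report IDs by component, keeping only components with several reports."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for sub in subs:
        groups[sub.component].append(sub.report_id)
    # Components hit by multiple independent reports are candidates for a root-cause review.
    return {comp: ids for comp, ids in groups.items() if len(ids) >= min_size}


subs = [
    Submission("BB-101", "Storage credential exposed in public JS bundle", "file-storage"),
    Submission("BB-102", "Upload endpoint accepts arbitrary file types", "file-storage"),
    Submission("BB-103", "Reflected XSS in search parameter", "search"),
]
print(related_clusters(subs))  # {'file-storage': ['BB-101', 'BB-102']}
```

In practice the interesting clusters often cut across tags (a leaked key for one service enabling abuse of another), so a sketch like this is only a starting point for the human pattern-spotting described above.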

I believe that more companies should start their own bug bounty programs, sooner. While many organizations pay for penetration tests, most don’t yet run bug bounty programs, and that’s a shame. If you are interested in starting your own bug bounty program, OWASP has a great resource for you.

If you are interested in learning more about bug bounty, there’s a ton of “getting started in bug bounty” content on YouTube and other places. In particular, check out anything from @Jhaddix, @hakluke and the Critical Thinking podcast.

Red Teaming: The Attacker’s Perspective

In contrast to the other two methods, red teaming seeks to emulate specific real-world attack scenarios. This involves a simulated attack on a specific system, or function, and aims to achieve a certain goal such as bypassing an identity provider to gain unauthorized access.

Red teams are typically internal security groups, but occasionally you’ll see outsourced red teams. Unlike pentesting, red teaming is intrinsically built on stealth, so red teams will go out of their way to fly under the radar. Red team engagements are often much longer affairs than pentests and require thorough planning and execution. Red teaming tests an organization’s resilience against an actual attack and can reveal weaknesses in its defenses that aren’t apparent during routine checks or via pentesting. Another big difference between pentests and red teams is that red teams often provide feedback, and even architectural insights, to security and engineering orgs within the company. Because red teams are usually internal, they can work within the organization to shore up security in many different places.

Even when penetration testers operate with credentials to a target, and can log in as a user, they still have to test and document every vulnerability and security gap they come across. Red teams, on the other hand, typically operate in an “assumed breach” fashion. This means we start with the assumption that we have gained an initial foothold on the target. Because red teams are emulating real attacks, we will start our engagement assuming that initial access has been gained via social engineering or by buying credentials. Our job is to test the specific attack vector and we don’t want to waste time social engineering or buying access credentials for every operation.

Red Team Operational Model

Red teams typically operate in an “assumed breach” scenario where the team assumes that users can be successfully phished or socially engineered, so they don’t waste time on those tasks. Red teams are great at identifying whether an organization is vulnerable to a specific type of attack: the red team performs research and then crafts a specific attack scenario. What they shouldn’t be doing is verifying whether your organization is vulnerable to phishing or other attacks that we already know have a high rate of success.

Many red teams operate in a “white-box,” authenticated way, so we have access to internal systems, source code, etc. However, this is not true for ALL red team engagements or all red teams.

The Synergy of the Three

Penetration testing provides a broad vulnerability analysis of systems and applications; red teams identify whether specific types of attacks will work against a company; and bug bounty provides crowd-sourced researchers who will go deep on whatever scope you give them.

In my experience, each of these practices informs the others, creating a feedback loop that results in a more robust security posture. And this is why I wrote this blog post. When a company strives to increase its security maturity and embraces all of the offensive security practices mentioned, the benefit can be profound!

Insights from penetration tests, whether they are internally or externally sourced, can guide red team operations. Red team operations will often identify security gaps and issues outside of the scope of the red team engagement. These ancillary findings can be handed to pentesters to focus on. Similarly, findings from bug bounty programs can provide technical insights that red teams (or pentesters!) can use to build operations around.

For example, if a penetration test uncovers a potential vulnerability, the team running the bug bounty program can invite bug bounty researchers to scrutinize this specific feature or function. This will leverage the diverse perspectives and techniques of the crowd on the issue. If a significant vulnerability is discovered, the red team can then simulate an attack to fully understand the implications and guide the development of countermeasures.

However, this only works if your internal teams have access to the right resources and data. Make sure that your red team has access to your bug bounty submissions and is trawling those submissions for opportunities. Similarly, the targets you give your external or internal pentesters must be informed by operational data from your red team and bug bounty programs.

The “Holy Trinity of Offensive Security”

For organizations that take their security posture seriously, penetration testing, bug bounty research, and red teaming can work together as a comprehensive security strategy. These methods are NOT standalone solutions but rather parts of a larger system that work together to fortify an organization’s defenses.

I have seen it myself while managing application security vulnerability programs, and it’s an amazing thing to be a part of. If you want to learn more, please reach out. My details are below.